Chat

Description

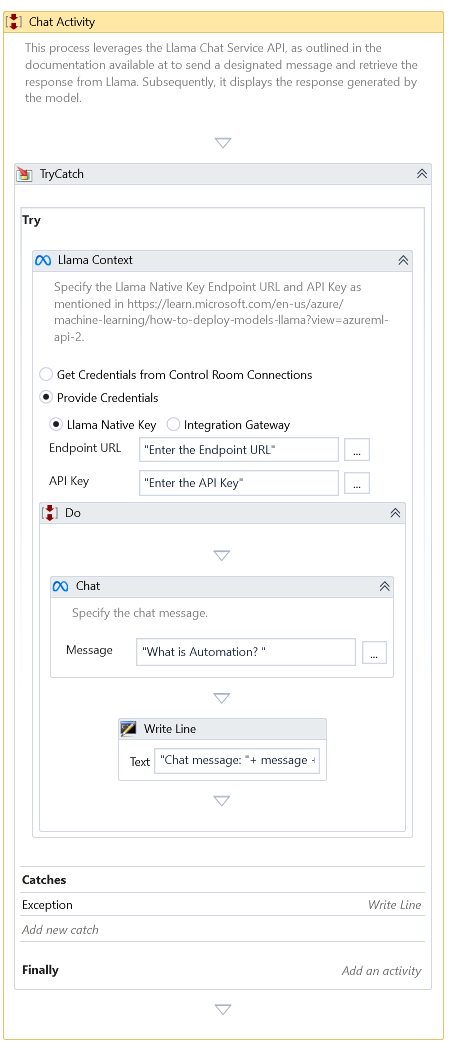

This Activity invokes the Llama Chat Service with the specified input message and returns the response received.

Properties

Input

- Message – The input message (prompt) sent to the Llama Chat Service to generate a chat response.

Optional

- System Message – Sets the context for the conversation with the model, typically directives, constraints, or essential background information that keep the responses accurate, consistent, and relevant.

- Add To History – When True, this interaction is added to the ongoing conversation history. This maintains context and continuity across the chat, which makes later responses more relevant and accurate. The default is True.

- Use History – When True, the model uses previous interactions from the conversation history as context, which produces more coherent and contextually relevant responses. The default is True.

- Maximum Tokens – Sets the maximum length, in tokens, of the generated response. Lower values keep replies shorter and more focused. If left empty, the Model's default maximum length is used, which is 256 tokens.

- Temperature – Controls the creativity and diversity of the model's responses. A higher temperature produces more varied, less predictable output, whereas a lower temperature produces more consistent, predictable output. The valid range is 0 to 2: values below 0 are treated as 0, and values above 2 are treated as 2. The default is 1.

- Top P – Also known as nucleus sampling. Controls the randomness of responses by sampling only from the most probable tokens whose combined probability reaches the Top P value. A higher value allows more diverse responses, while a lower value produces more predictable, focused output. The range is 0 to 1. The default is 1.

- Presence Penalty – Discourages the model from repeating topics or phrases that have already appeared in the conversation. Higher values encourage a broader range of topics and ideas, making the conversation more diverse. The valid range is 0 to 2: values below 0 are treated as 0, and values above 2 are treated as 2. The default is 0.

- Continue On Error – Specifies whether the automation continues when this Activity throws an error. Only Boolean values (True or False) are supported. The default is False.

If this Activity is inside a Try-Catch block and Continue On Error is set to True, the Catch block does not catch errors thrown by this Activity.
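Temperature, Top P, and Presence Penalty are standard decoding controls. The following is a minimal, hypothetical Python sketch of how such settings typically act on a model's next-token scores; it is not the Llama Chat Service's implementation. It assumes an OpenAI-style presence penalty (a flat deduction from the score of any token that has already appeared), works at the token level rather than on "topics", and clamps Top P to 0 to 1 as an assumption (the page only states that range). The names `clamp` and `sample_next_token` and the dictionary of logits are illustrative.

```python
import math
import random
from typing import Dict, List, Optional


def clamp(value: float, low: float, high: float) -> float:
    """Force value into [low, high], like the documented range handling."""
    return max(low, min(high, value))


def sample_next_token(
    logits: Dict[str, float],
    generated: List[str],
    temperature: float = 1.0,
    top_p: float = 1.0,
    presence_penalty: float = 0.0,
    rng: Optional[random.Random] = None,
) -> str:
    """Hypothetical sketch: pick the next token from raw model scores."""
    rng = rng or random.Random()
    temperature = clamp(temperature, 0.0, 2.0)            # documented: 0 to 2
    presence_penalty = clamp(presence_penalty, 0.0, 2.0)  # documented: 0 to 2
    top_p = clamp(top_p, 0.0, 1.0)                        # assumed clamping

    # Presence penalty (OpenAI-style assumption): subtract a flat amount from
    # any token that already appeared, no matter how many times it appeared.
    seen = set(generated)
    adjusted = {
        tok: score - (presence_penalty if tok in seen else 0.0)
        for tok, score in logits.items()
    }

    # Temperature 0: always choose the highest-scoring token (greedy).
    if temperature == 0.0:
        return max(adjusted, key=adjusted.get)

    # Temperature-scaled softmax; subtracting the max keeps exp() stable.
    scaled = {tok: score / temperature for tok, score in adjusted.items()}
    peak = max(scaled.values())
    exps = {tok: math.exp(v - peak) for tok, v in scaled.items()}
    total = sum(exps.values())
    ranked = sorted(
        ((tok, e / total) for tok, e in exps.items()),
        key=lambda kv: kv[1],
        reverse=True,
    )

    # Nucleus (Top P): keep the smallest set of most probable tokens whose
    # cumulative probability reaches top_p.
    nucleus = []
    cumulative = 0.0
    for tok, p in ranked:
        nucleus.append((tok, p))
        cumulative += p
        if cumulative >= top_p:
            break

    # Sample from the nucleus in proportion to the kept probabilities.
    r = rng.random() * sum(p for _, p in nucleus)
    for tok, p in nucleus:
        r -= p
        if r <= 0:
            return tok
    return nucleus[-1][0]  # guard against floating-point rounding
```

For example, `sample_next_token({"yes": 2.0, "no": 1.0}, generated=[], temperature=0)` returns `"yes"`. Applying the penalty before the temperature scaling mirrors how presence penalties are usually described, as an adjustment to the raw scores.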
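The Add To History and Use History flags work independently: one controls whether the current exchange is saved, the other whether saved exchanges are sent as context. The following is a minimal, hypothetical Python sketch of that interaction; it is not the Llama Chat Service's API. It assumes a role-based message list (`system`, `user`, `assistant`), assumes the System Message is sent with each call rather than stored in the history, and uses a stand-in `model` callable in place of the real service. `ChatSession` and its methods are illustrative names.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional

Message = Dict[str, str]  # assumed shape: {"role": ..., "content": ...}


@dataclass
class ChatSession:
    """Hypothetical sketch of conversation-history handling."""

    history: List[Message] = field(default_factory=list)

    def build_request(
        self, message: str, system_message: Optional[str], use_history: bool
    ) -> List[Message]:
        request: List[Message] = []
        if system_message:
            # Assumption: the system message is sent per call, not stored.
            request.append({"role": "system", "content": system_message})
        if use_history:
            # Use History = True: earlier exchanges are sent as context.
            request.extend(self.history)
        request.append({"role": "user", "content": message})
        return request

    def chat(
        self,
        message: str,
        model: Callable[[List[Message]], str],
        system_message: Optional[str] = None,
        add_to_history: bool = True,
        use_history: bool = True,
    ) -> str:
        reply = model(self.build_request(message, system_message, use_history))
        if add_to_history:
            # Add To History = True: save this exchange for later calls.
            self.history.append({"role": "user", "content": message})
            self.history.append({"role": "assistant", "content": reply})
        return reply
```

With a fake model that replies with the number of messages it received, `fake = lambda msgs: str(len(msgs))`, a first call `ChatSession().chat("hi", fake)` returns `"1"`; a second call on the same session returns `"3"`, because the saved user and assistant messages are sent along with the new message.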

Misc

- DisplayName – Add a display name to your Activity.

- Private – By default, the Activity logs the values of its properties in your workflow. When Private is selected, these values are not logged.

Output

- Chat Message – The chat response generated by the Model.

- Raw Response – The raw, unparsed response returned by the Llama Chat Service.

- Total Tokens – The total number of tokens processed for this chat.
